A Hidden Mathematical Rule Governs the Distribution of Neurons in the Brain

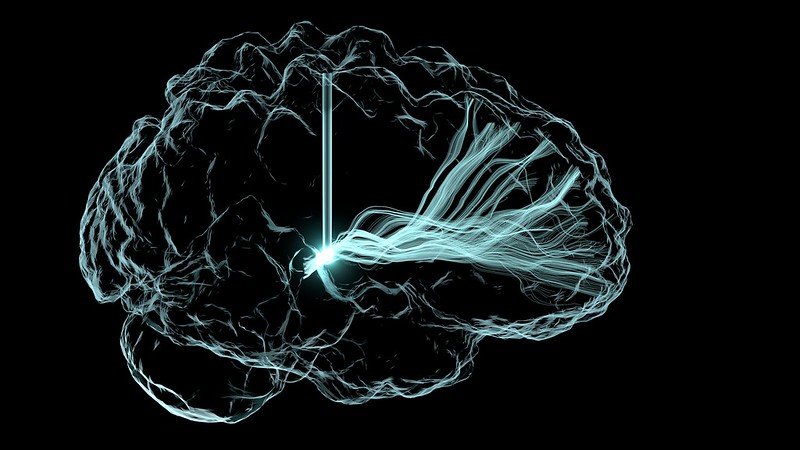

Human Brain Project (HBP) researchers have uncovered how neuron densities are distributed across and within cortical areas in the mammalian brain. As reported in Cerebral Cortex, they have revealed a fundamental organisational principle of cortical cytoarchitecture: the ubiquitous lognormal distribution of neuron densities.

Numbers of neurons and their spatial arrangement play a crucial role in shaping the brain’s structure and function. Yet, despite the wealth of available cytoarchitectonic data, the statistical distributions of neuron densities remain largely undescribed. This new study from the HBP at Forschungszentrum Jülich and the University of Cologne (Germany) advances our understanding of the organisation of mammalian brains.

The team accessed nine publicly available datasets covering seven species: mouse, marmoset, macaque, galago, owl monkey, baboon and human. After analysing the cortical areas of each, they found that neuron densities within these areas follow a consistent pattern – a lognormal distribution, pointing to a fundamental organisational principle underlying the densities of neurons in the mammalian brain.

A lognormal distribution is a statistical distribution characterised by a skewed bell-shaped curve. It arises, for instance, when taking the exponential of a normally distributed variable. It differs from a normal distribution in several ways. Most importantly, the curve of a normal distribution is symmetric, while the lognormal one is asymmetric with a heavy tail.
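The relationship between the two distributions can be illustrated with a short simulation. The sketch below is not taken from the study: the sample size, the random seed and the spread of 0.5 are arbitrary assumptions chosen for illustration. It draws normally distributed values, exponentiates them to obtain lognormal values, and compares the skewness of the two samples.

```python
import math
import random
import statistics


def sample_skewness(values):
    """Third standardised moment: about 0 for symmetric data, > 0 for a heavy right tail."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return sum(((v - mean) / sd) ** 3 for v in values) / len(values)


# Illustrative parameters (assumptions, not values from the study).
rng = random.Random(0)
normal_samples = [rng.gauss(0.0, 0.5) for _ in range(50_000)]

# Exponentiating a normally distributed variable gives a lognormal one.
lognormal_samples = [math.exp(x) for x in normal_samples]

normal_skew = sample_skewness(normal_samples)
lognormal_skew = sample_skewness(lognormal_samples)

# Taking the logarithm of the lognormal sample recovers the symmetric shape.
log_back_skew = sample_skewness([math.log(v) for v in lognormal_samples])

print(f"skewness of normal sample:       {normal_skew:.2f}")
print(f"skewness of lognormal sample:    {lognormal_skew:.2f}")
print(f"skewness after taking the log:   {log_back_skew:.2f}")
print(f"lognormal mean > median: "
      f"{statistics.fmean(lognormal_samples) > statistics.median(lognormal_samples)}")
```

The normal sample should show a skewness close to zero, while the lognormal sample is clearly right-skewed, with a mean pulled above its median by the heavy tail.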

These findings are relevant for modelling the brain accurately. “Not least because the distribution of neuron densities influences the network connectivity,” says Sacha van Albada, leader of the Theoretical Neuroanatomy group at Forschungszentrum Jülich and senior author of the paper. “For instance, if the density of synapses is constant, regions with lower neuron density will receive more synapses per neuron,” she explains. Such aspects are also relevant for the design of brain-inspired technology such as neuromorphic hardware.

“Furthermore, as cortical areas are often distinguished on the basis of cytoarchitecture, knowing the distribution of neuron densities can be relevant for statistically assessing differences between areas and the locations of the borders between areas,” van Albada adds.

These results are in agreement with the observation that surprisingly many characteristics of the brain follow a lognormal distribution. “One reason why it may be very common in nature is because it emerges when taking the product of many independent variables,” says Alexander van Meegen, joint first author of the study. In other words, the lognormal distribution arises naturally as a result of multiplicative processes, similarly to how the normal distribution emerges when many independent variables are summed.
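The contrast between summing and multiplying can be sketched numerically. This is a toy illustration rather than the model used in the study: the number of factors, the uniform range of 0.5 to 1.5 and the seed are hypothetical choices. For each trial, the same set of independent positive factors is both summed and multiplied.

```python
import math
import random
import statistics


def sample_skewness(values):
    """Third standardised moment: about 0 for symmetric data, > 0 for a heavy right tail."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return sum(((v - mean) / sd) ** 3 for v in values) / len(values)


# Hypothetical settings chosen only for illustration.
rng = random.Random(1)
n_factors = 30
n_trials = 20_000

sums = []
products = []
for _ in range(n_trials):
    factors = [rng.uniform(0.5, 1.5) for _ in range(n_factors)]
    sums.append(sum(factors))            # additive process
    products.append(math.prod(factors))  # multiplicative process

# log(product) is a sum of logs, so it should look roughly normal again.
log_products = [math.log(p) for p in products]

sum_skew = sample_skewness(sums)
product_skew = sample_skewness(products)
log_product_skew = sample_skewness(log_products)

print(f"skewness of sums:          {sum_skew:.2f}")
print(f"skewness of products:      {product_skew:.2f}")
print(f"skewness of log(products): {log_product_skew:.2f}")
```

The sums come out roughly symmetric, the products are strongly right-skewed, and the logarithm of the products is close to symmetric again, which is the signature of an approximately lognormal variable.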

“Using a simple model, we were able to show how the multiplicative proliferation of neurons during development may lead to the observed neuron density distributions,” explains van Meegen.

According to the study, in principle, cortex-wide organisational structures might be by-products of development or evolution that serve no computational function; but the fact that the same organisational structures can be observed for several species and across most cortical areas suggests that the lognormal distribution serves some purpose.

“We cannot be sure how the lognormal distribution of neuron densities will influence brain function, but it will likely be associated with high network heterogeneity, which may be computationally beneficial,” says Aitor Morales-Gregorio, first author of the study, citing previous works that suggest that heterogeneity in the brain’s connectivity may promote efficient information transmission. In addition, heterogeneous networks support robust learning and enhance the memory capacity of neural circuits.

Source: Human Brain Project