‘Healthy’ Vitamin B12 Levels not Enough to Ward off Neuro Decline

Meeting the minimum requirement for vitamin B12, needed to make DNA, red blood cells and nerve tissue, may not actually be enough – particularly for older adults. It may even put them at risk for cognitive impairment, according to a study published in Annals of Neurology.

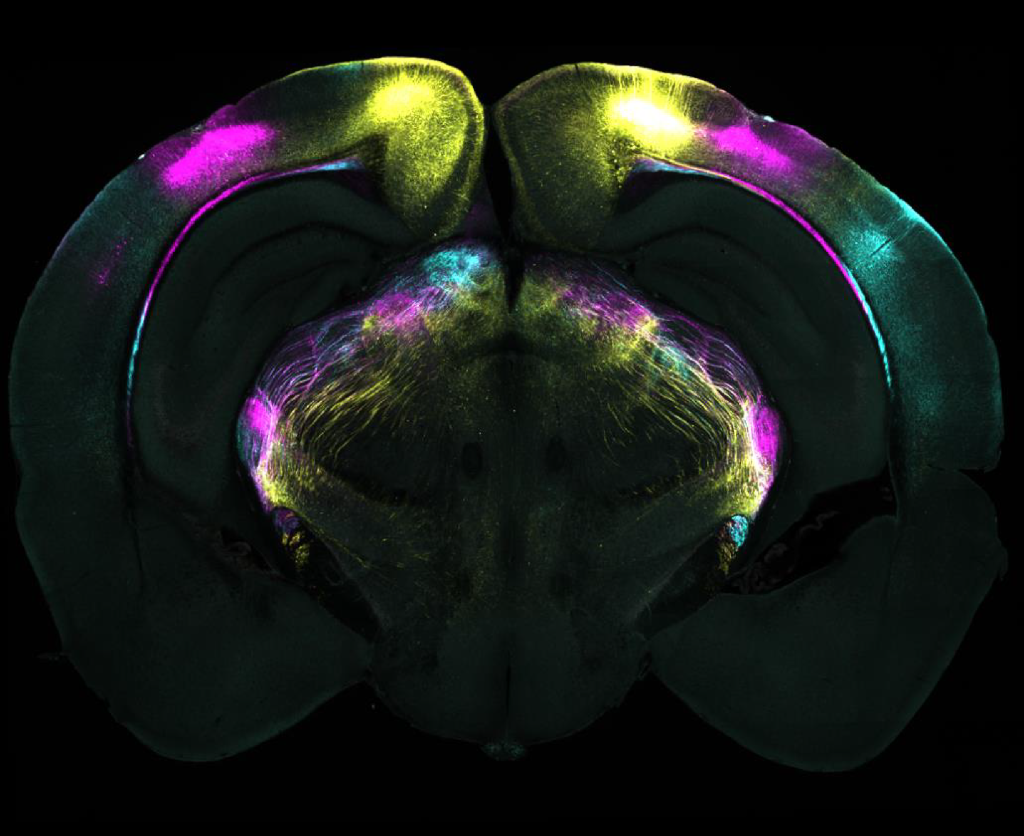

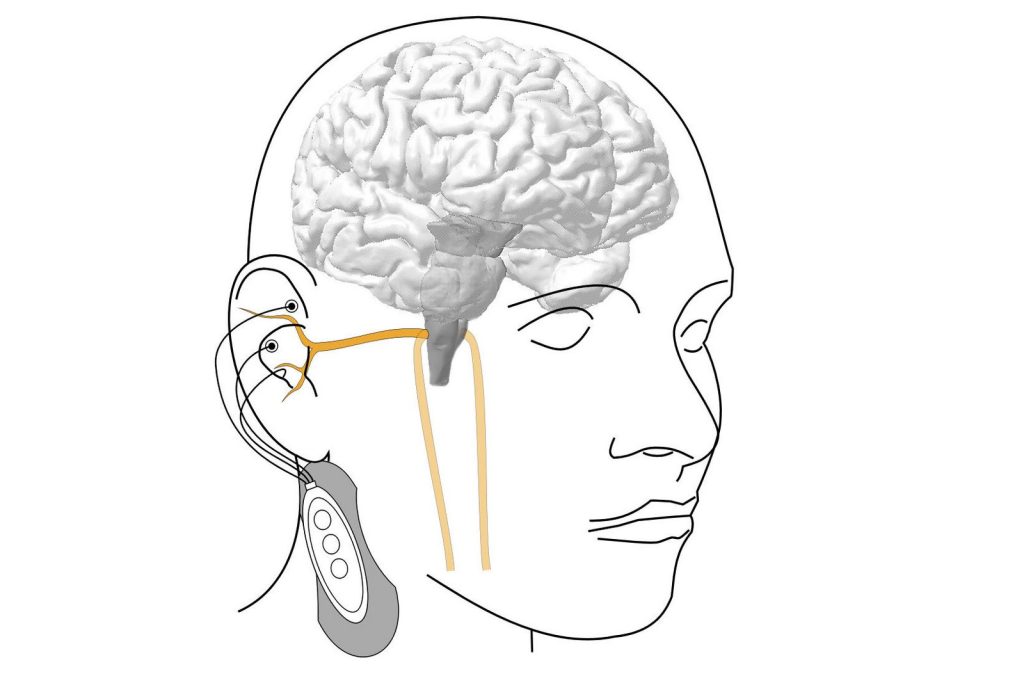

The research found that healthy older volunteers whose B12 concentrations were lower, but still in the normal range, showed signs of neurological and cognitive deficiency. These levels were associated with more damage to the brain’s white matter – the nerve fibres that enable communication between areas of the brain – and with test scores indicating slower cognitive and visual processing speeds, compared to those with higher B12.

The UC San Francisco researchers, led by senior author Ari J. Green, MD, of the Departments of Neurology and Ophthalmology and the Weill Institute for Neurosciences, said that the results raise questions about current B12 requirements and suggest the recommendations need updating.

“Previous studies that defined healthy amounts of B12 may have missed subtle functional manifestations of high or low levels that can affect people without causing overt symptoms,” said Green, noting that clear deficiencies of the vitamin are commonly associated with a type of anaemia. “Revisiting the definition of B12 deficiency to incorporate functional biomarkers could lead to earlier intervention and prevention of cognitive decline.”

Lower B12 correlates with slower processing speeds, brain lesions

In the study, researchers enrolled 231 healthy participants without dementia or mild cognitive impairment, whose average age was 71. They were recruited through the Brain Aging Network for Cognitive Health (BrANCH) study at UCSF.

Their blood B12 amounts averaged 414.8 pmol/L, well above the U.S. minimum of 148 pmol/L. After adjusting for factors like age, sex, education and cardiovascular risks, researchers looked at the biologically active component of B12, which provides a more accurate measure of the amount of the vitamin that the body can utilize. In cognitive testing, participants with lower active B12 were found to have slower processing speed, which is related to subtle cognitive decline. This effect was amplified by older age. They also showed significant delays in responding to visual stimuli, indicating slower visual processing speeds and generally slower brain conductivity.

MRIs revealed a higher volume of lesions in these participants’ white matter, which may be associated with cognitive decline, dementia or stroke.

While the study volunteers were older adults, who may have a specific vulnerability to lower levels of B12, co-first author Alexandra Beaudry-Richard, MSc, said that these lower levels could “impact cognition to a greater extent than what we previously thought, and may affect a much larger proportion of the population than we realize.” Beaudry-Richard is currently completing her doctorate in research and medicine at the UCSF Department of Neurology and the Department of Microbiology and Immunology at the University of Ottawa.

“In addition to redefining B12 deficiency, clinicians should consider supplementation in older patients with neurological symptoms even if their levels are within normal limits,” she said. “Ultimately, we need to invest in more research about the underlying biology of B12 insufficiency, since it may be a preventable cause of cognitive decline.”