Bacteria Invade the Brain after Medical Devices Are Implanted

Brain implants hold immense promise for restoring function in patients with paralysis, epilepsy and other neurological disorders. But a team of researchers at Case Western Reserve University has discovered that bacteria can invade the brain after a medical device is implanted, contributing to inflammation and reducing the device’s long-term effectiveness.

By identifying a target for intervention, the groundbreaking research, recently published in Nature Communications, could improve the long-term success of brain implants.

“Understanding the role of bacteria in implant performance and brain health could revolutionize how these devices are designed and maintained,” said Jeff Capadona, Case Western Reserve’s vice provost for innovation, the Donnell Institute Professor of Biomedical Engineering and senior research career scientist at the Louis Stokes Cleveland VA Medical Center.

Capadona’s lab led the study, which examined the presence of bacterial DNA in the brains of mouse models implanted with microelectrodes.

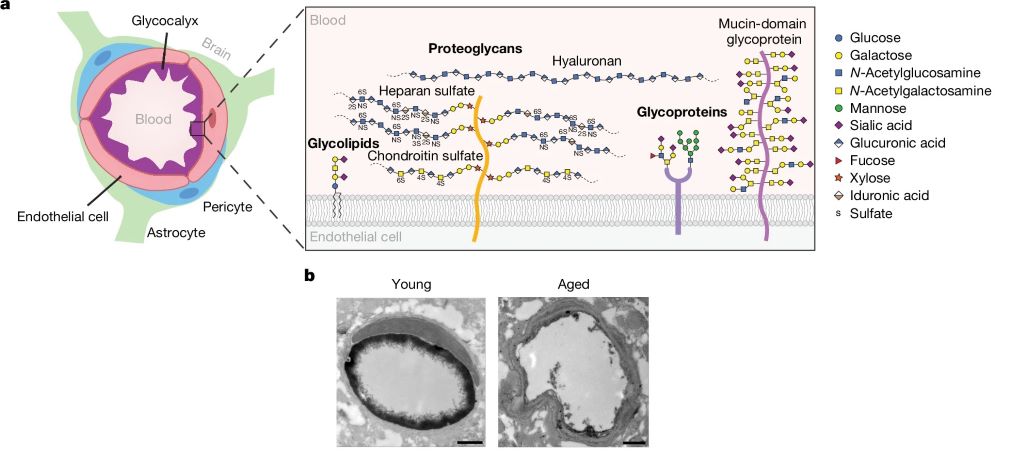

To their surprise, the researchers found bacteria linked to the gut inside the brain. The discovery suggests that implanting the device breaches the blood-brain barrier, which normally keeps microbes in the bloodstream out of the brain, and that this breach could allow them to enter.

“This is a paradigm-shifting finding,” said George Hoeferlin, the study’s lead author, who was a biomedical engineering graduate student at Case Western Reserve in Capadona’s lab. “For decades, the field has focused on the body’s immune response to these implants, but our research now shows that bacteria—some originating from the gut—are also playing a role in the inflammation surrounding these devices.”

In the study, treating the mouse models with antibiotics reduced bacterial contamination and improved the performance of the implanted devices, although prolonged antibiotic use proved detrimental.

The discovery’s implications go beyond device failure. Some of the bacteria found in the brain have been linked to neurological diseases, including Alzheimer’s, Parkinson’s and stroke.

“If we’re not identifying or addressing this consequence of implantation, we could be causing more harm than we’re fixing,” Capadona said. “This finding highlights the urgent need to develop a permanent strategy for preventing bacterial invasion from implanted devices, rather than just managing inflammation after the fact. The more we understand about this process, the better we can design implants that work safely and effectively.”

Capadona said his lab is now expanding the research to examine bacteria in other types of brain implants, such as ventricular shunts used to treat hydrocephalus, an abnormal buildup of fluid in the brain.

The team also examined fecal matter from a human subject implanted with a brain device and found similar results.

“This finding stresses the importance of understanding how bacterial invasion may not just be a laboratory phenomenon, but a clinically relevant issue,” said Bolu Ajiboye, professor in biomedical engineering at the Case School of Engineering and School of Medicine and scientist at the Cleveland VA Medical Center. “Through our strong translational pipeline between CWRU and the VA, we are now investigating how this discovery can directly contribute to safer, more effective neural implant strategies for patients.”

Source: Case Western Reserve University