How Human Brain Functional Networks Emerge and Develop during the Birth Transition

Shedding light on the growth trajectory of global functional neural networks before and after birth

Brain-imaging data collected from foetuses and infants have revealed a rapid, brain-wide surge in functional connectivity between brain regions at birth, possibly reflecting neural processes that support the brain’s ability to adapt to the external world. The findings come from a study published November 19th in the open-access journal PLOS Biology, led by Lanxin Ji and Moriah Thomason of the New York University School of Medicine, USA.

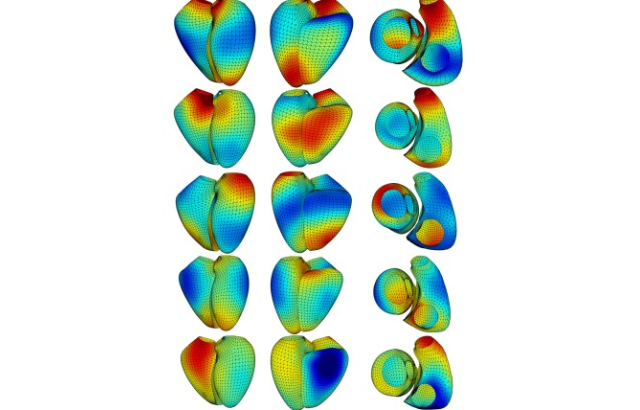

Understanding the sequence and timing of brain functional network development at the beginning of human life is critical. Yet many questions remain regarding how human brain functional networks emerge and develop during the birth transition. To fill this knowledge gap, Thomason and colleagues leveraged a large functional magnetic resonance imaging dataset to model developmental trajectories of brain functional networks spanning 25 to 55 weeks of post-conceptual gestational age. The final sample included 126 foetal scans and 58 infant scans from 140 subjects.

The researchers observed distinct growth patterns in different regions, showing that neural changes accompanying the birth transition are not uniform across the brain. Some areas exhibited minimal changes in resting-state functional connectivity (RSFC), defined as the correlation between the blood oxygen level-dependent (BOLD) signals of different brain regions when no explicit task is being performed. Other areas showed dramatic changes in RSFC at birth. The subcortical, sensorimotor, and superior frontal networks stand out as the networks that undergo rapid reorganisation during this developmental stage.
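To make the RSFC definition concrete, the sketch below computes pairwise Pearson correlations between regional time series. It is a minimal illustration only: the region names and signal values are invented toy data, the helper names `pearson` and `rsfc_matrix` are hypothetical, and the study's actual preprocessing and connectivity pipeline is not described here.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length time series."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def rsfc_matrix(signals):
    """Correlate every pair of regional signals (hypothetical helper)."""
    names = list(signals)
    return {a: {b: pearson(signals[a], signals[b]) for b in names} for a in names}

# Toy BOLD-like signals (invented values, not data from the study).
signals = {
    "region_a": [1.0, 2.0, 3.0, 4.0, 5.0],
    "region_b": [2.0, 4.0, 6.0, 8.0, 10.0],  # rises with region_a
    "region_c": [5.0, 4.0, 3.0, 2.0, 1.0],   # falls as region_a rises
}
fc = rsfc_matrix(signals)
```

In this toy case, `region_a` and `region_b` are perfectly correlated while `region_a` and `region_c` are perfectly anticorrelated; real resting-state signals are far noisier and give intermediate values.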

Additional analysis highlighted the subcortical network as the only network that exhibited a significant increase in communication efficiency among neighbouring nodes. The subcortical network represents a central hub, relaying nearly all incoming and outgoing information to and from the cortex and mediating communication between cortical areas. By contrast, global efficiency increased gradually in sensorimotor and parietal-frontal regions throughout the foetal-to-neonatal period, possibly reflecting the establishment or strengthening of connections as well as the elimination of redundant connections.
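The efficiency measures mentioned above can be illustrated with standard graph-theory definitions. The sketch below assumes that "communication efficiency among neighbouring nodes" corresponds to local efficiency and uses the common definitions for an unweighted, undirected graph: global efficiency is the mean inverse shortest-path length over all node pairs, and local efficiency is the mean global efficiency of each node's neighbourhood subgraph. The graph is an invented toy example, and the study's own network construction (for instance, weighting or thresholding of connections) may differ.

```python
from collections import deque

def shortest_path_lengths(adj, source):
    """Breadth-first search: hop counts from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbour in adj[node]:
            if neighbour not in dist:
                dist[neighbour] = dist[node] + 1
                queue.append(neighbour)
    return dist

def global_efficiency(adj):
    """Mean of 1/d(u, v) over ordered node pairs; unreachable pairs add 0."""
    n = len(adj)
    if n < 2:
        return 0.0
    total = 0.0
    for u in adj:
        dist = shortest_path_lengths(adj, u)
        total += sum(1.0 / d for v, d in dist.items() if v != u)
    return total / (n * (n - 1))

def local_efficiency(adj):
    """Mean global efficiency of the subgraph induced by each node's neighbours."""
    values = []
    for u in adj:
        neighbours = adj[u]
        subgraph = {v: adj[v] & neighbours for v in neighbours}
        values.append(global_efficiency(subgraph))
    return sum(values) / len(values)

# Toy network (invented): a triangle a-b-c with one extra node d attached to c.
adj = {
    "a": {"b", "c"},
    "b": {"a", "c"},
    "c": {"a", "b", "d"},
    "d": {"c"},
}
g_eff = global_efficiency(adj)
l_eff = local_efficiency(adj)
```

Adding a direct connection between two previously distant nodes raises global efficiency, while adding connections among a node's neighbours raises local efficiency, which is one way to read the regional differences reported above.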

According to the authors, this work unveils fundamental aspects of early brain development and lays the foundation for future research on the influence of environmental factors on this process. In particular, further studies could reveal how factors such as sex, prematurity, and prenatal adversity interact with the timing and growth patterns of children’s brain network development.

The authors add, “This study for the first time documents the significant change of brain functional networks over the birth transition. We observe that growth patterns are regionally specific, with some areas of the functional connectome showing minimal changes, while others exhibit a dramatic increase at birth.”

Provided by PLOS